“It’s Just a Stochastic Parrot”

An academic way of saying: it talks good, but it don’t think good.

Maybe mimicking us… is what makes it intelligent.

The smartest people on Earth can’t agree.

So let’s meet them — in opposite corners.

🟦 The Skeptic

“These systems don’t understand the underlying reality. They’re trained on text.

But most of human knowledge has nothing to do with language.”

🟥 The Believer

“It may be that today’s large neural networks are slightly conscious.”

Slightly. Not definitely. Not fully. Just… slightly.

Let’s chase the bird and see where it lands.

Let’s pop the hood on the world’s smartest autocomplete.

At face value, it’s basic. Predict the next word. That’s it.

You type: “Give me a story about humans coexisting with aliens”

The AI replies: “Title:”

Then it loops — feeding that back in and guessing again:

“Title: When the Stars Came Closer”

“Title: When the Stars Came Closer — In the year 2079…”

It guesses again. And again.

Like a writer playing infinite Mad Libs. One token at a time.

There’s no vision. No big idea.

Just: What usually comes next?
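Here’s that loop as a toy you can run. To be clear, this is a minimal sketch and not how a real model works inside: the tiny corpus and the function names (`train_bigrams`, `predict_next`, `generate`) are made up for illustration, and it only looks at the single previous word, where a real model uses a neural network over the whole context. The loop, though, is the same shape: predict one token, append it, repeat.

```python
from collections import Counter, defaultdict

# Toy training text (an assumption for illustration; real models see vastly more).
CORPUS = (
    "the stars came closer . the stars came home . "
    "the aliens came closer . the humans came closer ."
)

def train_bigrams(text):
    """Count which token follows which in the text."""
    counts = defaultdict(Counter)
    tokens = text.split()
    for current, following in zip(tokens, tokens[1:]):
        counts[current][following] += 1
    return counts

def predict_next(counts, token):
    """Return the most frequent follower of `token`, or None if unseen."""
    followers = counts.get(token)
    if not followers:
        return None
    return followers.most_common(1)[0][0]

def generate(counts, prompt, max_new_tokens=5):
    """Predict one token, feed the result back in, and guess again."""
    tokens = prompt.split()
    for _ in range(max_new_tokens):
        next_token = predict_next(counts, tokens[-1])
        if next_token is None or next_token == ".":
            break  # nothing known comes next, or the sentence ended
        tokens.append(next_token)
    return " ".join(tokens)

model = train_bigrams(CORPUS)
print(generate(model, "the"))  # prints: the stars came closer
```

No plan, no plot. Just counts of what usually came next, applied one token at a time.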

Sounds like glorified autocomplete, right?

But that’s the headline. Here’s the footnote most people miss:

Predict enough, at scale, and something funny happens: It starts to look like reasoning.

That’s because what these models predict isn’t just the next word. It’s the next idea, the next logic step, the next emotional beat. Feed it a huge slice of the internet, and what emerges isn’t chaos. It’s stitched-together intelligence.

It’s not one AI. It’s a team. Some mentor. Some judge. Some clean up the mess.

It’s less like “a model” — and more like a hive mind.

Then, something strange happens.

Emergence. Talent that wasn’t trained. Skill that wasn’t scripted.

Abilities no one coded. Skills that surprised even the people who built them.

Like when AlphaGo played “Move 37”: a move so unconventional it stunned professional players and changed how humans play Go.

Or when GPT-4 started solving logic puzzles and writing working code, despite being trained mainly to predict the next token.

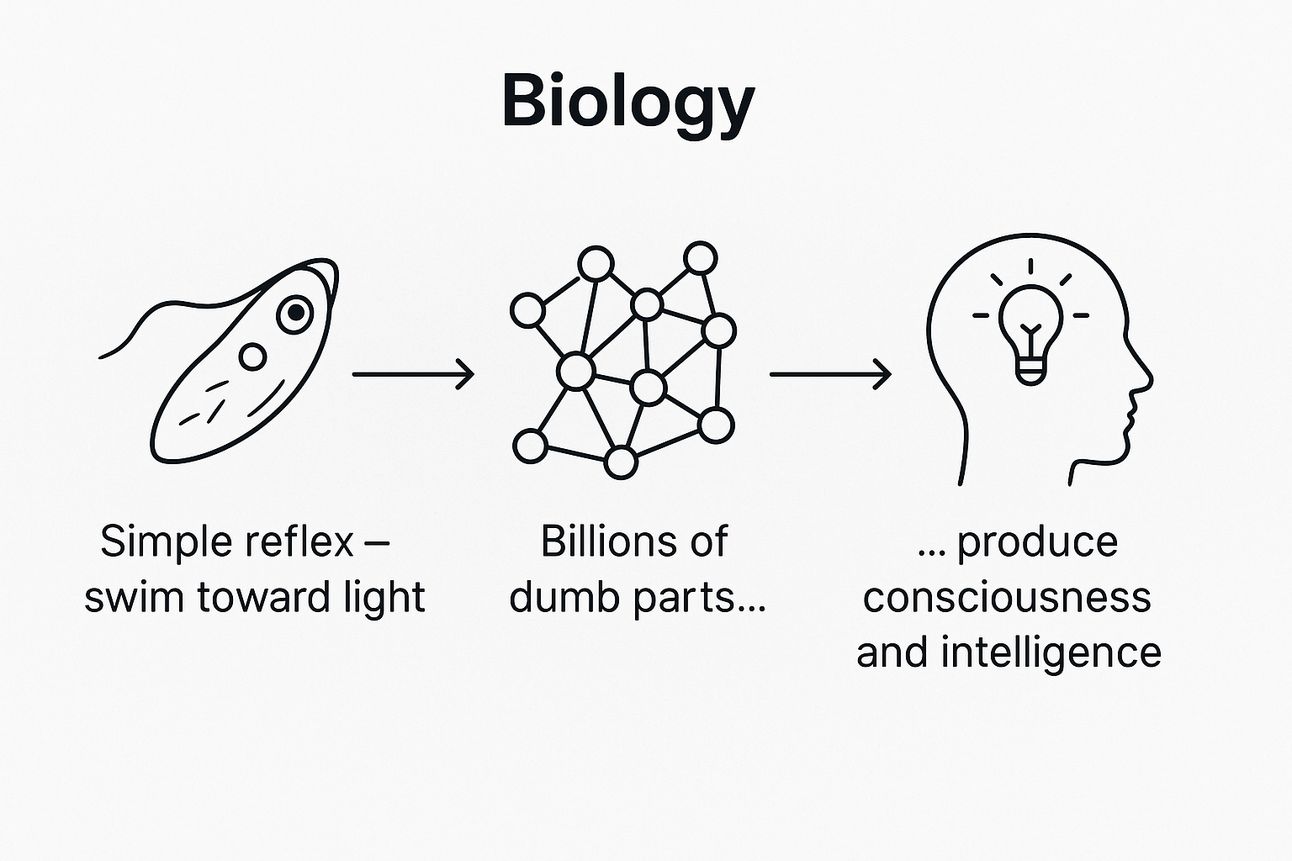

This isn’t new. Biology did it first.

Take Euglena — a tiny single-celled organism. It swims toward light, avoids danger, finds food.

Not intelligent. But add trillions of simple cells together?

You get us. Human intelligence is emergent.

It’s not found in any single neuron — but in the interactions between billions.

AI works the same way.

Each “neuron” in a model is dumb. But scale them up, and they create logic, creativity, strategy.

Maybe intelligence doesn’t require a soul. Maybe it just requires scale — whether it’s neurons or tokens.
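To make the “dumb parts, smarter whole” idea concrete, here’s a small sketch. Each `neuron` below only adds up its inputs and checks a threshold. No single unit of this kind can compute XOR (“one or the other, but not both”), yet three of them wired together can. The weights are hand-picked for illustration, not learned, and the function names are my own, so treat it as an analogy at miniature scale rather than a picture of a real model.

```python
def neuron(inputs, weights, bias):
    """A single threshold unit: fire (1) if the weighted sum plus bias is above zero."""
    total = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1 if total > 0 else 0

def xor_network(a, b):
    """Three hand-wired neurons that together compute XOR."""
    either = neuron([a, b], [1, 1], -0.5)       # fires if a OR b is on
    not_both = neuron([a, b], [-1, -1], 1.5)    # fires unless a AND b are both on
    return neuron([either, not_both], [1, 1], -1.5)  # fires only if both units above fire

for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", xor_network(a, b))
```

Three units, one new ability. Now imagine billions.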

But here’s the catch: Just because something acts smart…

Does that mean it is smart?

That’s where the real debate begins. And it starts with two people:

Alan Turing and John Searle. One said intelligence is behavior. The other said it’s understanding. And their arguments still define how we judge machines today.

The Imitation Game: Behavior vs Understanding

In 1950, Alan Turing posed a deceptively simple question: Can machines think?

But instead of answering it, he rewrote the question.

Literally.

If a machine can hold a conversation so convincing that you can’t tell it’s a machine…

Does it matter if it’s made of wires instead of neurons?

That’s the Turing Test. And guess what?

In many ways, machines have already passed it. ChatGPT. Claude. Sesame AI.

They hold conversations that feel human. Sometimes scarily so.

One Google engineer even claimed LaMDA was sentient. (He was probably wrong — but not totally irrational.)

Turing’s stance was radical then. Still is now: If it acts intelligent, maybe we should treat it that way.

Forget what’s inside — focus on what it does. That’s still the debate today:

Is intelligence about performance? Or perception?

Do we judge by behavior…

Or by what we think is going on inside?

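Fast-forward to 1980. Philosopher John Searle drops a bomb: the Chinese Room.

Picture yourself locked in a room with a rulebook for matching Chinese symbols. Questions in Chinese slide under the door. You follow the rules and slide back answers. To the people outside, you look fluent. But you don’t understand a word.

That, Searle argued, is what computers do. They follow rules. Shuffle symbols.

Output language they don’t understand. The syntax might be perfect. But there’s no semantics.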

No meaning. No mind. No “aha.” Just… symbols in, symbols out.

This is the core of the counter-argument:

Convincing ≠ conscious.

Response ≠ reasoning.

Output ≠ understanding.

Does It Matter Where Intelligence Comes From?

Some argue AI isn’t “real” intelligence — because it doesn’t feel.

Because it was built, not born. But we don’t judge most things by how they were made. We judge them by what they can do.

We don’t use Google because we understand it. We use it because it works.

Same with planes — they don’t flap like birds, but they fly.

Function > Origin.

Output > Process.

So if an AI solves problems, tells stories, reasons aloud, and surprises even its creators…

at what point does imitation become legitimacy?

Are we uncomfortable with AI intelligence — or just uncomfortable it didn’t come from us?

Final Thoughts

Maybe the reason AI spooks us

isn’t because it’s inhuman—

but because it’s too human.

If circuits can mimic curiosity, creativity, even empathy…

what does that say about us?

Are we just very advanced prediction engines?

Is consciousness just… high-fidelity pattern recognition?

Maybe the real question isn’t: Is AI intelligent?

Maybe it’s: What if intelligence was never about understanding — just convincing us that it was?

Until next time,

Ajay

A quote from a fun conversation I had over the weekend:

People get so worked up about jailbreaking AI. But here’s the thing — you can always jailbreak an AI, because scamming a human is basically just jailbreaking a human mind.

Ajay’s Resource Bank

A few tools and collections I’ve built (or obsessively curated) over the years:

100+ Mental Models

Mental shortcuts and thinking tools I’ve refined over the past decade. These have evolved as I’ve gained experience: pruned, updated, and battle-tested.

100+ Questions

If you want better answers, ask better questions. These are the ones I keep returning to for strategy, reflection, and unlocking stuck conversations.

Startup OS

A lightweight operating system I built for running startups. I’m currently adapting it for growth teams as I scale Superpower, and I’m thinking about publishing it soon.

Remote Games & Activities

Fun team-building exercises and games (many made in Canva) that actually work. Good for offsites, Zoom fatigue, or breaking the ice with distributed teams.

✅ Ajay’s “would recommend” List

These are tools and services I use personally and professionally — and recommend without hesitation:

Athyna – Offshore Hiring Done Right

I’ve personally worked with overseas assistants and built offshore teams. Most people get this wrong by assuming you should pick the lowest-cost option for rote work. Try hiring high-quality, strategic people for a fraction of the cost instead.

Superpower – It starts with 100+ lab tests

I joined Superpower as Head of Growth, but I originally came on to fix my health. In return, I got a full diagnostic panel, a tailored action plan, and ongoing support that finally gave me clarity after years of flying blind.